AI-generated media sit somewhere between representational image (a representation of data rather than reality) and posthuman artefact. This ambiguous nature suggests that we need methods that consider these images not just as cultural objects, but also as products of the systems that made them. I am following here in the wake of others who have broken ground in this space.

For Friedrich Kittler and Jussi Parikka, the technological, infrastructural and ecological dimensions of media are as important as content, if not more so. They extend Marshall McLuhan’s notion that ‘the medium is the message’ beyond the affordances of a given media type, form or channel, into the very mechanisms and processes that shape the content before and during its production or transmission.

I take these ideas and extend them to the outputs themselves: a media-materialist analysis. Rather than dismissing AI media as just ‘slop’, this method treats them as cultural-computational artefacts, assemblages compiled from layered systems. In particular, I break these systems into four layers: data, model, interface, and prompt. The method contends that each step of the generative process leaves traces in visual outputs, and that we might be able to train ourselves to read them.

Data

There is no media generation without training data. These datasets can be so vast as to feel unknowable, or so narrow that they feel constricting. LAION-5B, for example, contains roughly 5.85 billion image-text pairs; Stable Diffusion was originally trained on subsets of it. Technically, you could train a model on a handful of images, or even just one, but such a model would be ‘remembering’ more than ‘generating’. Video models tend to use comparatively smaller datasets, such as Panda-70M, which contains over 70 million video-caption pairs: about 167,000 hours of footage.
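To give the video dataset figures a sense of scale, here is a rough back-of-envelope calculation. It assumes exactly 70 million clips and 167,000 hours, both rounded from the figures quoted above, so the result is indicative only.

```python
# Rounded figures taken from the paragraph above (assumptions, not exact counts).
CLIP_COUNT = 70_000_000      # "over 70 million video-caption pairs"
TOTAL_HOURS = 167_000        # "about 167,000 hours of footage"

SECONDS_PER_HOUR = 3600

# Average clip length in seconds = total seconds of footage / number of clips.
average_clip_seconds = TOTAL_HOURS * SECONDS_PER_HOUR / CLIP_COUNT

print(f"Average clip length: {average_clip_seconds:.1f} seconds")
```

On these rounded numbers the average works out to roughly 8.6 seconds per clip, which suggests the dataset is made up of many short, captioned fragments rather than long-form footage.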

Training data for AI models is also hugely contentious, given that many proprietary tools are trained on data scraped from the open internet. Thus, when considering datasets, it’s important to ask what kinds of images and subjects are privileged. Social media posts? Stock photos? Vector graphics? Humans? Animals? Are diverse populations represented? Such patterns of inclusion/exclusion might reveal something about the dataset design, and the motivations of those who put it together.

Some datasets are human-curated (e.g. COCO, ImageNet), and others are algorithmically scraped and compiled (e.g. LAION-Aesthetics). There may be readable differences in how these datasets shape images. You might consider:

- Are the images coherent? Chaotic/glitched?

- What kinds of prompts result in clearer, cleaner outputs, versus morphed or garbled material?

The dataset is the first layer where cultural logics, assumptions, patterns of normativity or exclusion are encoded in the process of media generation. So: what can you read in an image or video about what training choices have been made?

Model

The model is a program: code and computation. The model determines what happens to the training data — how it’s mapped, clustered, and re-surfaced in the generation process. This re-surfacing can influence styles, coherence, and what kinds of images or videos are possible with a given model.

If there are omissions or gaps in the training data, the model may fail to render coherent outputs around particular concepts, resulting in glitchy images, or errors in parts of a video.

Midjourney’s models are proprietary, though they are generally understood to be diffusion-based, like Stable Diffusion, an openly released model first published in 2022 and developed since by Stability AI. Stable Diffusion works via a process of iterative de-noising: each stage in the process brings the outputs closer to a viable, stable representation of what’s included in the user’s prompt. Leonardo.Ai’s newer Lucid models also operate via diffusion, but specialists are brought in at various stages to ‘steer’ the model in particular directions, e.g. to verify what appears as ‘photographic’, ‘artistic’, ‘vector graphic design’, and so on.
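The iterative de-noising idea can be sketched in a few lines, under some loud assumptions. The `oracle_denoiser` function below is hypothetical: it simply returns a known target, standing in for the trained, prompt-conditioned neural network that a real system uses. The cosine-shaped schedule `alpha_bar` and the four-number ‘image’ are likewise illustrative. The update is a deterministic DDIM-style step, not Stable Diffusion’s actual code.

```python
import math
import random


def alpha_bar(t: int, steps: int) -> float:
    """Toy noise schedule: share of signal kept at step t (1.0 at t=0, ~0 at t=steps)."""
    return math.cos(0.5 * math.pi * t / steps) ** 2


def oracle_denoiser(noisy, t, target):
    """ASSUMPTION: stand-in for a trained network that predicts the clean image.

    A real model only sees the noisy input, the step, and the prompt;
    this oracle cheats by returning the known target.
    """
    return list(target)


def sample(target, steps=20, seed=0):
    """Start from pure noise and de-noise step by step (deterministic DDIM-style update)."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]          # pure Gaussian noise
    errors = [max(abs(a - b) for a, b in zip(x, target))]

    for t in range(steps, 0, -1):
        a_t = alpha_bar(t, steps)
        a_prev = alpha_bar(t - 1, steps)
        clean_guess = oracle_denoiser(x, t, target)
        # Noise implied by the current sample and the clean-image guess.
        noise = [(xi - math.sqrt(a_t) * ci) / math.sqrt(1.0 - a_t)
                 for xi, ci in zip(x, clean_guess)]
        # Re-mix guess and noise at the next, less noisy level.
        x = [math.sqrt(a_prev) * ci + math.sqrt(1.0 - a_prev) * ni
             for ci, ni in zip(clean_guess, noise)]
        errors.append(max(abs(a - b) for a, b in zip(x, target)))

    return x, errors


# A four-value "image" stands in for real pixel (or latent) data.
result, errors = sample([0.2, 0.8, 0.5, 0.1])
print("final sample:", [round(v, 3) for v in result])
```

The point of the sketch is the shape of the loop: the sample is never produced in one shot, but re-estimated at each noise level. Whatever the real denoiser has learned, or failed to learn, is therefore applied many times over on the way to the final image.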

When considering the model’s imprint on images or videos, we might consider:

- Are there recurring visual motifs, compositional structures, or aesthetic fingerprints?

- Where do outputs break down or show glitches?

- Does the model privilege certain patterns over others?

- What does the model’s “best guess” reveal about its learned biases?

Analysing AI-generated media with these considerations in mind may reveal the internal logics and constraints of the model. Importantly, though, these logics and constraints will always shape AI media, whether they are readable in the outputs or not.

Interface

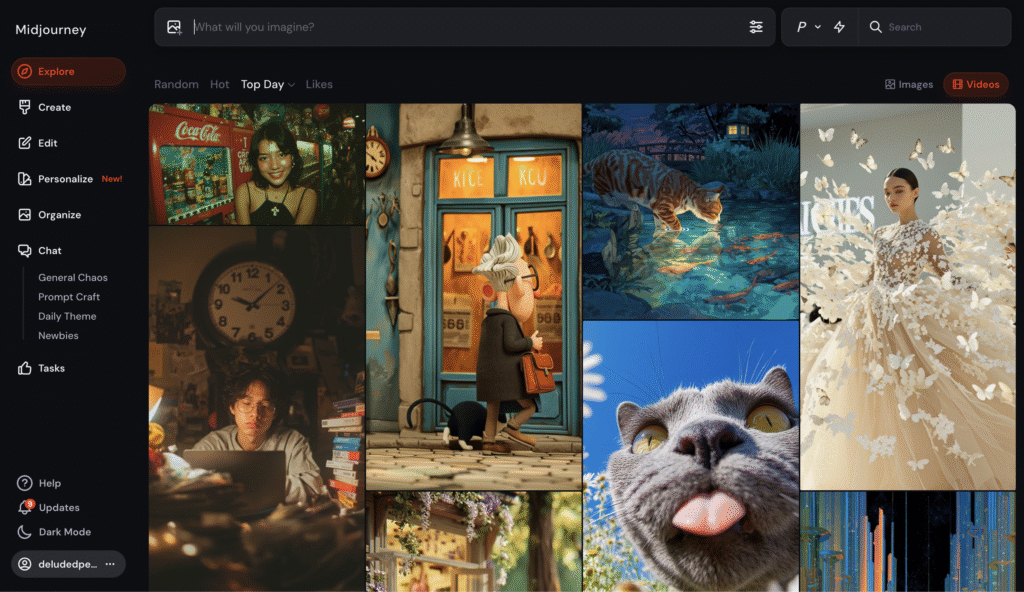

The interface is what the user sees when they interact with any AI system. Interfaces shape user perceptions of control and creativity. They may guide users towards a particular kind of output by making some choices easier or more visible than others.

Midjourney, for example, displays a simple text box with the option to open a sub-menu featuring some more customisation options. Leonardo.Ai’s interface is more what I call a ‘studio suite’, with many controls visible initially, and plenty more available with a few menu clicks. Offline tools like DiffusionBee and ComfyUI similarly offer both simple (DiffusionBee) and complex (ComfyUI) options.

When looking at interfaces, consider what controls, presets, switches or sliders are foregrounded, and what is either hidden in a sub-menu or not available at all. This will give a sense of what the platform encourages: technical mastery and fine control (lots of sliders, parameters), or exploration and chance (minimal controls). Does this attract a certain kind of user? What does this tell you about the ‘ideal’ use case for the platform?

Interfaces, then, don’t just shape outputs. They also cultivate different user subjectivities: the tinkerer, the artist, the consumer.

Reading interfaces in outputs can be tricky. If the model or platform is known, one can discuss with some confidence how the interface may have pushed certain styles, compositions, or aesthetics. But even if the platform is not known, there are elements to work with. A coherent style may point to prompt adherence or to presets embedded in the interface. Stable compositions, or more chaotic clusters of elements, may point to a slider that was available to the user.

Whimsical or overly ‘aesthetic’ outputs often come from Midjourney. Increasingly, outputs from Kling and Leonardo are becoming much more realistic, and not in an uncanny way. But both Kling and Leonardo’s Lucid models give human figures a recognisable plastic sheen.

Prompt

While some have speculated that other user input modes might be forthcoming, and others have suggested that such modes might be better, the prompt has remained the mainstay of the AI generation process, whether for text, image, video, software, or interactive environment. Some platforms say explicitly that their tools or models offer good ‘prompt adherence’, i.e. what you put in is what you’ll get, but this is contingent on the prompt being plausible and coherent.

Prompts activate the model’s statistical associations (usually learned from the captions paired with images in the training data), but are filtered through linguistic ambiguity and platform-specific ‘prompting grammars’.

Tools or platforms may offer options for prompt adherence or enhancement. Enhancement typically passes the user’s prompt through a pre-trained LLM designed to embellish it with more descriptors and pointers.

If the prompt is known, one might consider the model’s interpretation of it in the output, in terms of how literal or metaphorical the model has been. There may be notable traces of prompt conventions, or community reuse and recycling of prompts. Are there any concepts from the prompt that are over- or under-represented? If you know the model as well as the prompt, you might consider how much the model has negotiated between user intention and known model bias or default.

Even the clearest prompt is mediated by statistical mappings and platform grammars — reminding us that prompts are never direct commands, but negotiations. Thus, prompts inevitably reveal both the possibilities and limitations of natural language as an interface with generative AI systems.

Sample Analysis

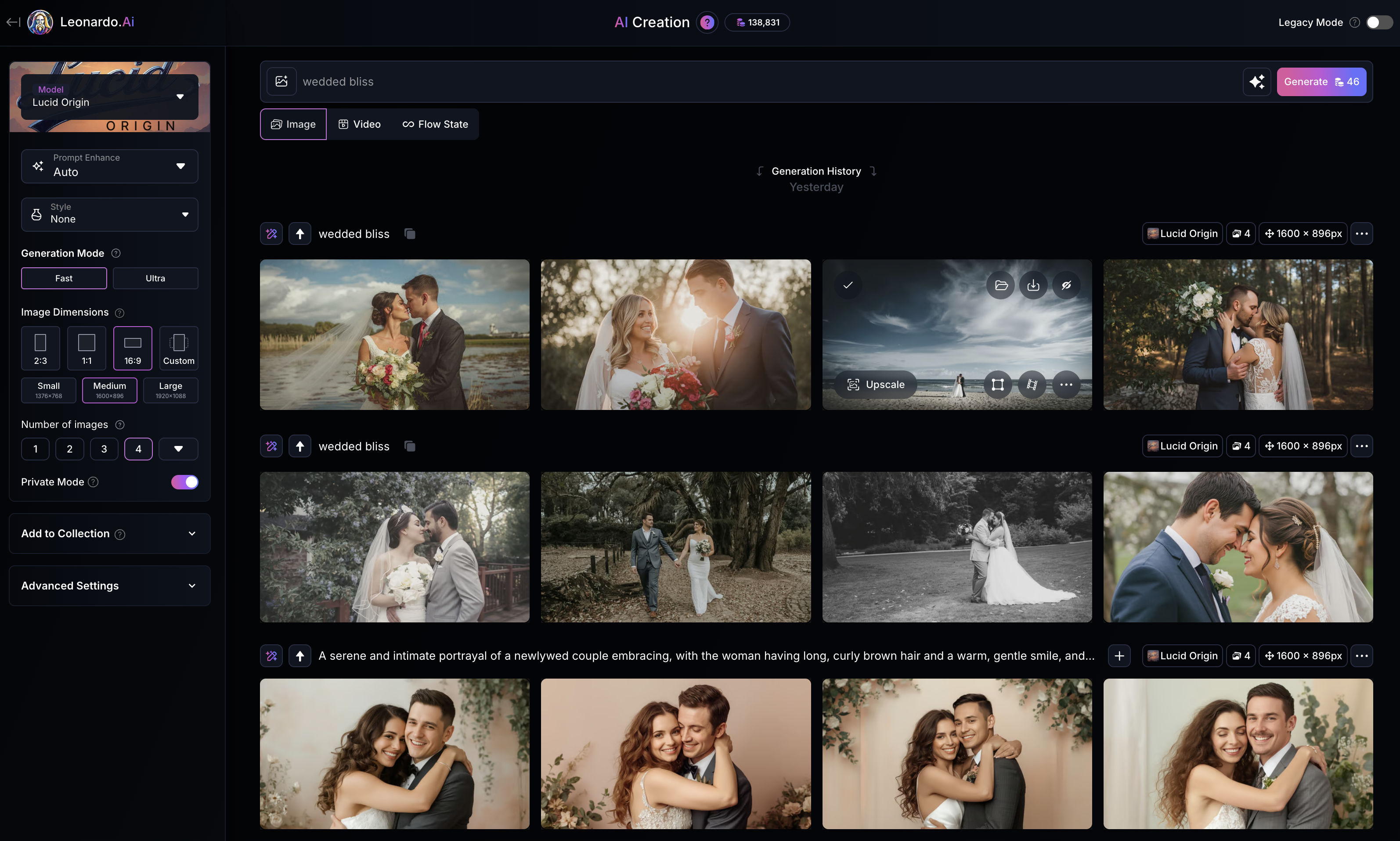

| Setting | Value |
| --- | --- |
| Prompt | ‘wedded bliss’ |
| Model | Lucid Origin |
| Platform | Leonardo.Ai |
| Prompt enhancement | off |
| Style preset | off |

The human figures in this image are young, white, thin, able-bodied, and adhere to Western and mainstream conventions of health and wellness. The male figure has short trimmed hair and a short beard, and the female figure has long blonde hair. The male figure is taller than the female figure. They are pictured wearing traditional Western wedding garb: a suit for the man, and a white dress with veil for the woman. Notably, all of the above was true for each of the four generations that came out of Leonardo for this prompt. The only real differences were in setting/location, and in the distance of the subjects from the ‘camera’.

By default, Lucid Origin appears to compose images with subjects in the centre of frame and in sharp focus, with background details in soft focus or completely blurred. This centred, symmetrical composition with selective focus suggests that Leonardo’s defaults tend toward professional photography aesthetics even when style presets are explicitly turned off.

The model struggles a little with fine human details, such as eyes, lips, and mouths. Notably, the number of fingers and their general proportionality are much improved from earlier image generators (fingernails may be a new problem zone!). However, if figures are touching, such as in this example where the human figures are kissing, or if their faces are close, the model struggles to keep shadows and facial features consistent. Here, for instance, the man’s nose appears to disappear into the woman’s right eye. When the subjects are at a distance, inconsistencies and errors are more noticeable.

Overall, though, the clarity and confident composition of this image (and of the others that came out of Leonardo with the same prompt) suggest that a great many wedding photos, or images from commercial wedding products, are present in the training data.

Interestingly, without prompt enhancement, the model defaulted to an image presumably from the couple’s wedding day, as opposed to interpreting ‘wedded bliss’ to mean some other happy time during a marriage. This literal interpretation suggests that training data captions likely associate ‘wedded bliss’ (or ‘wed*’ as a wildcard term) directly with wedding imagery rather than with the broader concept of happiness in marriage.
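As a rough illustration of what a ‘wed*’ wildcard would and would not catch in caption text, the sketch below runs the pattern over a handful of captions. The captions are invented for this example and are not drawn from any real dataset; `matches_wildcard` is a hypothetical helper, and real training pipelines learn associations statistically rather than by literal pattern matching.

```python
import re
from fnmatch import fnmatchcase

# ASSUMPTION: hypothetical captions written for illustration only.
captions = [
    "bride and groom kiss on their wedding day",
    "newlyweds cutting the cake",
    "wedded bliss: couple at sunset ceremony",
    "elderly couple laughing in their kitchen",
    "wedge heels on a shop shelf",
]


def matches_wildcard(caption: str, pattern: str = "wed*") -> bool:
    """Return True if any word in the caption matches the shell-style pattern."""
    words = re.findall(r"[a-z]+", caption.lower())
    return any(fnmatchcase(word, pattern) for word in words)


hits = [c for c in captions if matches_wildcard(c)]
misses = [c for c in captions if not matches_wildcard(c)]

print("matched:")
for caption in hits:
    print("  ", caption)
print("not matched:")
for caption in misses:
    print("  ", caption)
```

In this toy set the pattern pulls in wedding-day captions and an unrelated ‘wedge’, while the one caption that depicts everyday marital happiness contains no ‘wed*’ word at all. That is consistent with, though it does not prove, the reading that the phrase is tied to ceremony imagery rather than to married life more broadly.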

This analysis shows how attention to all four layers (data biases, model behaviour, interface affordances, and prompt interpretation) reveals the ‘wedded bliss’ image as a cultural-computational artefact shaped by commercial wedding photography, heteronormative assumptions, and the technical characteristics of Leonardo’s Lucid Origin model.

This analytic method is meant as an alternative to dismissing AI media outright. To read AI images and video as cultural-computational artefacts is to recognise them as products, processes, and infrastructural traces all at once. Such readings resist passive consumption, expose hidden assumptions, and offer practical tools for interpreting the visuals that generative systems produce.

This is a summary of a journal article currently under review. Out of respect for the ethics of peer review, this version is much edited and heavily abridged, and the sample analysis was written specifically for this post. Once the article is published, I will link it here.